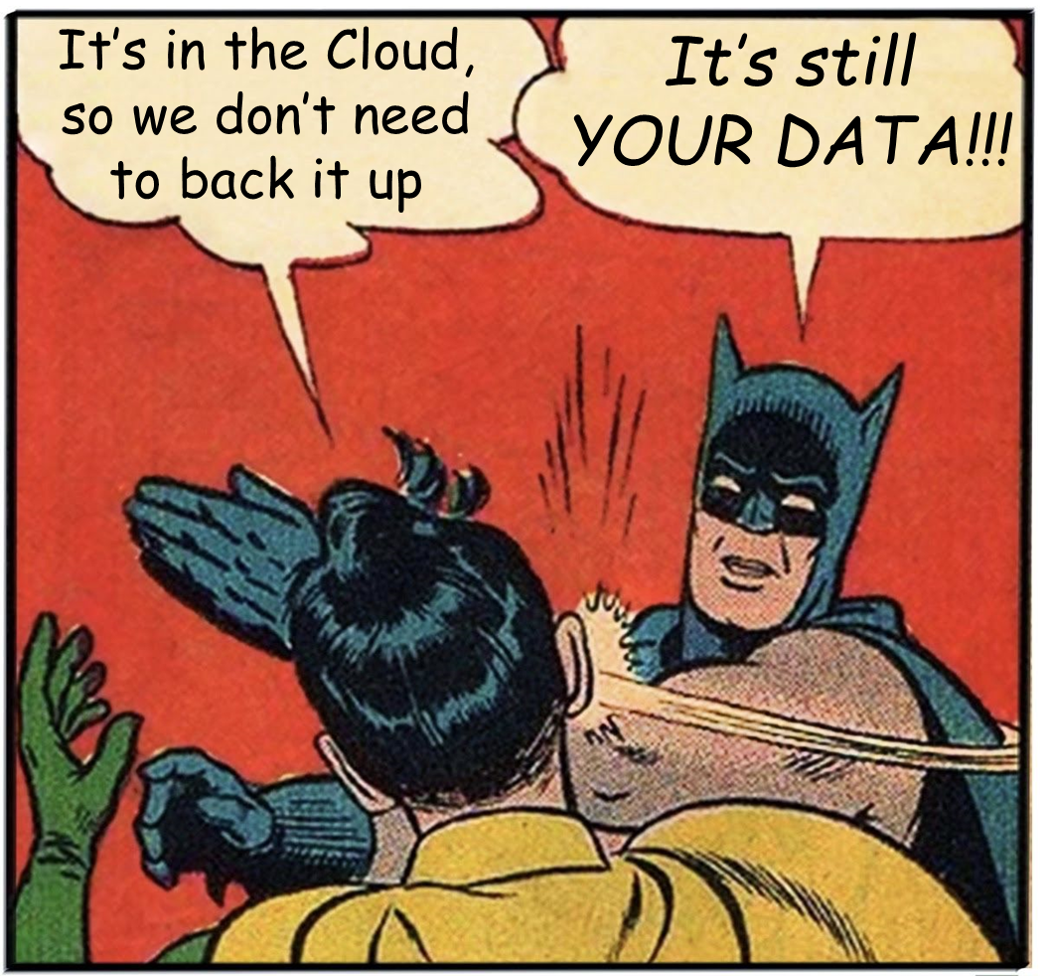

World Backup Day PSA: No one cares about (or relies upon) your data more than you do

Happy #WorldBackupDay! In COVID times, we often hear about “the New Normal” as a reminder that things won’t entirely go back to the way they were. But for World Backup Day (WBD), I’d like to remind you of an “Old Normal” that has not changed: your data is still YOUR DATA. I’ve been in …