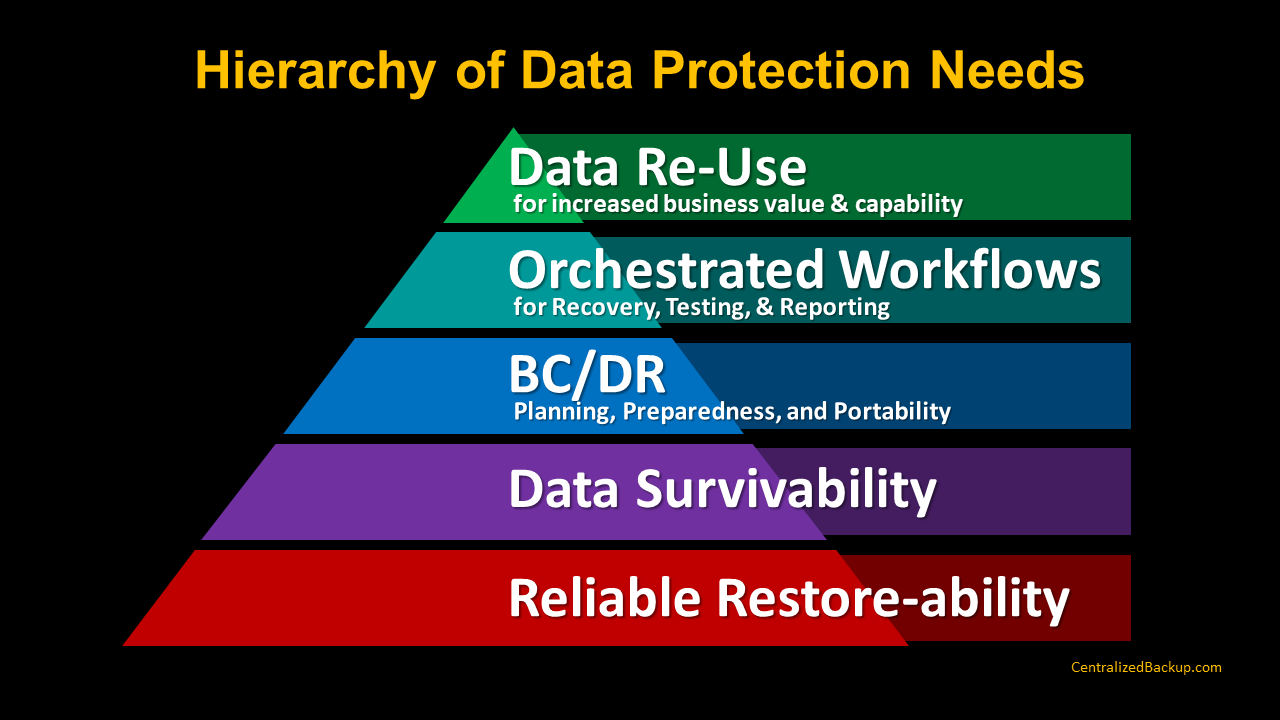

In 1943, Abraham Maslow described a hierarchy of needs in psychology, now usually drawn as Maslow’s Pyramid of Needs: until you address foundational needs such as food and water, you can’t feel safe, you can’t find love, you struggle with self-respect, and so on. Data protection has a similar hierarchy (starting from the bottom):

Reliable Restore-ability

The most basic need in any data protection scenario is the assured ability to recover your data. If you aren’t confident that you can reliably recover your data, then the data protection solution has no purpose at all.

The answer to this need is better backups … and not just backups, but backups combined with snapshots and replication. That protection has to cover your workloads wherever they run: physical servers on-premises, virtual machines on-prem and cloud-hosted, and cloud-native software. Wherever your workloads are today (or will be six months from now), the requirement to protect them persists.

Ask yourself, “Can I reliably restore my stuff?” Just as with Maslow’s hierarchy, until you address this foundation, you really can’t move up the stack.

Data Survivability

Get your data out of the building! For the last 30 years, that has meant tape for many of us. Today, many are leaping into clouds … but however you do it, please get your data out of the building. I do want to point out that “the cloud” does not, and should not, entirely replace or kill tape. In most organizations, 85%+ of your data does not have long-term retention requirements, so the right destination depends on how long each kind of data must be kept:

- Yes, you should keep versions of almost everything for perhaps a year or so, to mitigate cyber-attacks and folks who overwrite last year’s stuff with this year’s, so maybe 15 months.

- A good amount of data could have value worth unlocking, so for data mining and analytics, I could foresee 2-, 3-, or up to 5-year retention using the cloud.

- For 10+ year retention, covering what might be 5-10% of your data that actually has long-term retention or regulatory compliance mandates (patient lifespans, patent/research histories, executive and legal directives, etc.) … TAPE: long-lasting, portable, durable, modern tape.
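To make the tiering above concrete, here is a minimal Python sketch of a retention-policy chooser. It assumes each data set has already been tagged with two hypothetical classification flags (whether it carries a long-term retention mandate, and whether it has analytics value); the names `RetentionPolicy` and `choose_policy` are invented for illustration, and the month values are only the rough figures from the list above, not a recommendation for any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RetentionPolicy:
    target: str       # where the retained copies live
    keep_months: int  # retention period in months (rough figures from the text)


# Tiers from the list above; hypothetical names, illustrative values only.
VERSIONED = RetentionPolicy("versioned backups", 15)  # "maybe 15 months"
ANALYTICS = RetentionPolicy("cloud", 60)              # upper end of "2, 3, up to 5 year"
LONG_TERM = RetentionPolicy("tape", 120)              # "10+ year", so at least 120 months


def choose_policy(has_retention_mandate: bool, has_analytics_value: bool) -> RetentionPolicy:
    """Pick a tier from two hypothetical data-classification flags."""
    if has_retention_mandate:
        # Regulatory or legal mandates win, even if the data also has analytics value.
        return LONG_TERM
    if has_analytics_value:
        return ANALYTICS
    # Everything else: keep versions long enough to recover from an attack or overwrite.
    return VERSIONED


# Hypothetical data sets tagged as (mandate, analytics value).
datasets = {
    "patient-records": (True, False),
    "sales-history": (False, True),
    "team-file-shares": (False, False),
}
for name, (mandate, analytics) in datasets.items():
    policy = choose_policy(mandate, analytics)
    print(f"{name}: {policy.target}, keep {policy.keep_months} months")
```

Checking the mandate first means regulated data always lands on tape, even when it also has analytics value; a real policy might keep an additional cloud copy for that case.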

Now, if you (or your boss’s boss) truly don’t like tape, maybe consider a cloud service provider that will cut a monthly tape for you. Otherwise, send everything to a cloud (or two). At the end of the day, get your data out of the building so that your data survives … and so that you have a better chance of ensuring that your business survives.

BC/DR — Business Continuity & Disaster Recovery

There are at least four aspects of BC/DR to consider:

DR and BC aren’t the same. DR takes the survivable data and the reliable restore-ability and puts them to work somewhere else … whereas BC focuses first and foremost on business processes. Real BC/DR is grounded in understanding those business processes and mapping their dependencies on IT systems.

Planning & Expertise: Understanding and quantifying operational processes, IT dependencies, business impacts, etc. is critical. That expertise likely won’t come from your Backup Admin, so seek outside help that can objectively assess your processes, and then plan to mitigate IT interruptions accordingly.

Preparedness: Recognize that if a server was running in Dallas but has now been spun up in Boston, or just in “some cloud,” the users won’t simply reconnect. Networking for any multi-site topology, and especially for cloud-hosted DRaaS (Disaster Recovery as a Service), requires significant pre-planning and testing. Do not underestimate this.

BC/DR is NOT just about earthquakes and fires; it’s about continuity of operations. Most IT service interruptions are actually foreseeable, because they come from platform migrations. IT wants to move your servers from physical to virtual, from Dallas to Boston, from Hyper-V to VMware, or from on-prem to cloud-hosted … these are all server moves, which (left to old-school methods) will result in outages … albeit planned outages. Instead, treat every migration, whether P2V (physical-to-virtual), V2C (virtual-to-cloud), or C2V (cloud-to-virtual), as an interruption to your business. The same rapid and reliable recovery mechanisms that would recover you from a flooded server room can minimize downtime as you move from your data center to a cloud … or between clouds … or back on-prem again. Otherwise, you are creating your own outages; don’t do that.

Orchestrated Workflows

Once you get past tactical restore-ability and assured survivability … you start to see many process-centric capabilities within BC/DR. Here is the problem: humans are not good at repetitive tasks, especially complex repetitive tasks.

So, get the humans out of the way. Humans are great at defining strategy, establishing goals, and creating the process steps. After that, look for orchestration that lets you build a workflow for each of those recovery tasks … then run those recovery workflows on a regular schedule … and derive reports that identify what is working and what needs improvement. That is where humans can get involved again.
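The pattern described above (humans define the steps, software runs them repeatedly and reports the results) can be sketched in a few lines of Python. This is a hypothetical, tool-agnostic illustration: `Workflow`, `add_step`, `report`, and the placeholder step functions are invented names, not the API of any orchestration product, and the schedule itself (for example, a nightly job) is left to whatever scheduler you already use.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple
import time


@dataclass
class StepResult:
    name: str
    ok: bool
    seconds: float
    error: str = ""


@dataclass
class Workflow:
    """A named, ordered list of recovery steps (hypothetical structure)."""
    name: str
    steps: List[Tuple[str, Callable[[], None]]] = field(default_factory=list)

    def add_step(self, name: str, action: Callable[[], None]) -> "Workflow":
        # Each action should raise an exception if its step fails.
        self.steps.append((name, action))
        return self

    def run(self) -> List[StepResult]:
        results: List[StepResult] = []
        for name, action in self.steps:
            start = time.perf_counter()
            try:
                action()
                results.append(StepResult(name, True, time.perf_counter() - start))
            except Exception as exc:
                results.append(StepResult(name, False, time.perf_counter() - start, str(exc)))
                break  # later steps depend on earlier ones, so stop at the first failure
        return results


def report(workflow: Workflow, results: List[StepResult]) -> str:
    """Summarize what worked and what needs improvement."""
    lines = [f"Workflow: {workflow.name}"]
    for r in results:
        status = "PASS" if r.ok else f"FAIL ({r.error})"
        lines.append(f"  {r.name}: {status} in {r.seconds:.2f}s")
    not_run = len(workflow.steps) - len(results)
    if not_run:
        lines.append(f"  {not_run} step(s) not run")
    return "\n".join(lines)


# Placeholder actions standing in for real restore, network, and test tooling.
def restore_vm() -> None:
    pass


def reconnect_network() -> None:
    raise RuntimeError("DNS record still points at the old site")


def verify_application() -> None:
    pass


recovery = (
    Workflow("Recover order-entry application")
    .add_step("Restore VM from backup", restore_vm)
    .add_step("Reconnect users to the recovered server", reconnect_network)
    .add_step("Verify application responds", verify_application)
)
print(report(recovery, recovery.run()))
```

Stopping at the first failed step mirrors how recovery usually works: there is little point verifying an application whose network never came back, and the report makes that gap visible to the humans reviewing it.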

Data Re-Use

In Maslow’s hierarchy, the top of the pyramid is Self-Actualization … becoming the best possible you. In this pyramid, the pinnacle (the best possible data protection) is Data Management.

The first four layers are a great set of capabilities, and frankly those outcomes are better than what most organizations have today. But that level of investment in effort, planning, hardware, and software has a cost. The ROI of most legacy approaches to data protection was calculated by simply quantifying downtime and data loss … and then comparing that with the cost of the data protection hardware, software, services, and labor. Any way you look at it, it’s a big investment; but what if you could do more?

- You already have a data protection solution that can reliably recover your data.

- You can recover your data to a location other than where the data typically resides.

- You can automate the connectivity to that data and orchestrate tasks.

So, what if you ran those orchestrated restores into a sandbox (an isolated test area) that isn’t visible to the production network? What if you automated bringing the data in and enabling connectivity to that secondary instance, so that others could work with a benign copy of the data while production continues unscathed?

- You could test patches or new application versions without affecting production. If the new build breaks the test environment, you’ll be glad that you didn’t roll it out.

- You could enable DevOps to test their applications with live data. Every morning, they come into work and yesterday’s live data is ready for them to play with.

- You could provide access to AI/ML or other data mining tools.

- You could provide access to regulatory compliance auditors.

This applies to any scenario where you enable others to do their jobs better, because they now have access to production data without affecting the production environment. Every one of those scenarios creates business value. Now, your ROI for data protection is not “just” the mitigation of downtime and data loss, but also the acceleration these new capabilities bring to the business.

Just as in Maslow’s Pyramid, where you often can’t be who you want to be until you meet some foundational needs, you can’t get to the data management you want without first addressing the foundational needs of data protection.

Please let me know your thoughts.